Earlier this week I practised knee surgery, attended a conference in Dubai and watched people arrange invisible furniture – all in a basement in east London. The occasion was an event entitled ‘Techspectations: Mixed Reality: HoloLens, Haptics & Beyond’, hosted by Engage Works at its Flux Lounge facility.

Amelia Kallman, head of innovation at Engage Works, explained that mixed reality can be seen as a step on from both virtual reality and augmented reality. VR, she said, uses a single headset that immerses the user in a virtual world but excludes them from the physical world around them, while AR adds a layer of information or basic interactivity onto the physical world. Mixed reality, by contrast, overlays the physical world with a virtual world that users can interact with, while maintaining face-to-face contact. Forecasters expect the MR market to be worth $90bn by 2020, compared with $30bn for the AR market.

Richard Vincent, founder of FundamentalVR, echoed Facebook’s Mark Zuckerberg in saying that he believes VR/AR/MR to be “the next computing platform”, following on from PCs and mobile devices. He went on to compare today’s VR technology, such as the Oculus Rift, with the ‘brick’ mobile phones of the mid-1980s – a significant step forward at the time, but offering just a hint of what was to come.

FundamentalVR had a demonstration of VR and haptic technology at the event, showing how anaesthetists can practise an injection required in knee surgery. Equipped with a VR headset and a haptic pen, I had a go. Different surfaces gave different tactile feedback when I touched them with the virtual needle – bone felt hard, while other tissue types offered varying degrees of resistance. Vincent added that VR is the best medium for individual instruction of this kind, while group demonstrations are better suited to MR. It occurred to me that, while it is often said that future developments have the potential to take away people’s livelihoods, this was the first time I had seen a technology that might even make cadavers redundant.

Max Doelle, digital strategist and chief prototyper (“which means I get paid to play with this stuff”) at innovation studio Kazendi, talked about Microsoft’s HoloLens, which has been available since the spring in North America, and for which orders are now being taken in Europe. Unlike many of the other devices in this area, HoloLens has onboard processing, so it can operate without an external computer. It runs Windows 10 and can therefore use any app designed for that operating system. It has separate lenses for red, green and blue light, and creates a 3D image directly on the retina. Small speakers in the headset provide 3D audio.

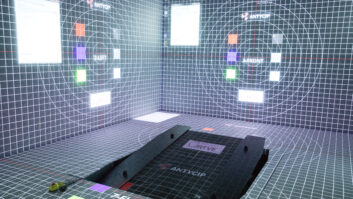

HoloLens uses a number of different interactions: world co-ordinates; gaze input (when I tried it, I found it surprisingly unintuitive to force myself to look directly at something to be able to select it); gestural input (setting up a dual-HoloLens demo, Doelle reminded me of a mime artist); voice input, using the Cortana virtual assistant; spatial sound; and spatial mapping – once HoloLens is activated it starts mapping its surroundings, and it remembers them when it is next switched on in the same location.

HoloLens enables you to add 3D objects and animations into the space around you, which you can then walk around and interact with. In the two-device demo (the UK’s first, apparently), Doelle showed various members of the audience how to bring virtual tables and chairs into the room, and measure distances and areas. The output of one of the devices was displayed on a videowall behind the presentation, so the audience could follow what was happening. It was slightly disappointing that, when a virtual lamp was picked up, the app didn’t recognise the table top as a usable surface, so this too had to be placed on the floor. (Doelle said that was a feature for a future release of the software.)

Another demonstration at the event used the HTC Vive VR device to create a virtual meeting room. Kitted out with a Vive headset, headphones and two handheld controllers, I was able to participate as a 3D avatar in a virtual meeting with an Engage Works employee in Dubai, and select a PowerPoint to run on the display in the virtual room. While this in itself didn’t seem especially useful, it struck me that a more fully featured virtual meeting with multiple participants could well mimic the real-world equivalent in a way that feels more natural than a video call.

But for me, the star of the day was HoloLens. I was impressed by its all-in-one nature; and by the fact that, because you can still see your surroundings, there is little chance of motion sickness (although Vincent pointed out that, if the headset isn’t adjusted properly, there can be some ‘bleed’ around the virtual image which can be disorientating). I liked Doelle’s point that richer content that involves more of the senses leads to better understanding and better learning. And I’m intrigued that it offers, as Doelle put it, “unlimited screen estate” – which is something that could make it very appealing to financial traders or control room personnel, for instance.

Is VR/AR/MR the next computing platform? My suspicion is that just as we haven’t thrown out our computers and replaced them with mobile devices, these new technologies will supplement rather than replace existing solutions. If it turns out that we are only at the brick phone stage, then the future looks to be a very exciting one.
